Wiki

Clone wikiavro_from_delimited / UseCase4

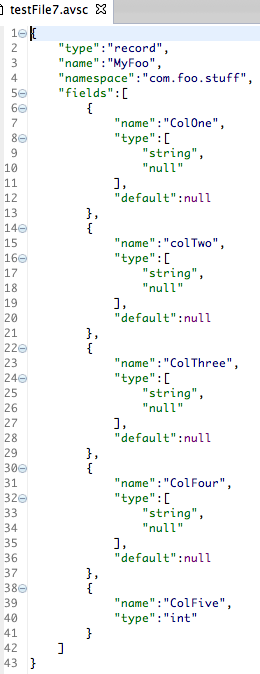

Use Case 4 - Validate Column Heads Against Given Schema

Here's where the use cases begin to get more realistic. Your Camel or Fuse or other integration server is bringing you a daily file, you've got it set up to suck in the file to a specific and predetermined schema, but your vendor who provides you with the file has a habit of changing schemas on you without even letting you know.

The only thing you have to catch any changes is the first line of every file, which has the column heading names to correlate with your existing schema.

So we're back to the same input file as use case 1.

ColOne ,colTwo, ColThree,ColFour, ColFive 898sdf,,sdf,aaaaa,89343 13534,34352,sdf,aa,89443

Here's the API is run (as we run it in the test).

boolean args, in order:

- boolean firstLineColHeads

- boolean validateSchemaFromFirstLineColHeads

- boolean exceptionOnBadData

@Test public void testUseCase3() { DelimToAvro delimToAvro = new DelimToAvro(); File targetFile = new File("target/deleteme.avro"); File inputFile = new File("./src/test/resources/testFile1.csv"); File schemaFile = new File("./src/test/resources/testFile7.avsc"); delimToAvro.get(inputFile.toURI(), schemaFile.toURI(), targetFile, ",", true, true, false); }

What does it produce?

Once again, the data is encapsulated in an avro file. But if there is a material change in the column heads, such as the vendor adding a new column, an exception will be thrown, and the data file never processed.

It will be up to your integration server to know what to do from there, because we shouldn't automate something as sensitive as what to do with the new column.

Updated