Details

Suggestion

Resolution: Fixed

Description

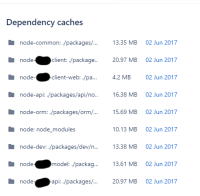

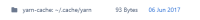

Is there a way to store dependencies (in my case, Python packages) between builds, à la Travis CI (https://docs.travis-ci.com/user/caching/)? If not, is there another way to speed up the builds?