imageSVM Regression - Manual for Application

Concept of imageSVM

imageSVM is an IDL based tool for the support vector machine (SVM) classification and regression analysis of remote sensing image data. Its workflow allows a flexible and transparent use of the support vector concept for both simple and advanced classification/regression approaches.

The goal of imageSVM is to advance the use of the support vector concept in the field of remote sensing image analysis. To achieve this goal the following objectives were defined: (1) create a platform and license independent implementation for SVM classification and regression; (2) enable the use of common image file formats for data input and output, including training and validation data; (3) integrate a widely accepted, powerful algorithm for the training of the SVM that is open-source and updated by machine learning specialists on a regular basis; (4) offer alternative workflows for automated parameterization (by default values) and user-defined parameterization (limited to parameters relevant for remote sensing); (5) visualize training parameters and intermediate results in a transparent workflow to increase the understanding and acceptance of the support vector approach in the remote sensing community.

imageSVM uses LIBSVM (Chang and Lin 2001) during the training of the SVM. LIBSVM is a tool for support vector classification, regression and distribution estimation. It is open-source and freely available. It was developed in 2001 and is updated and improved on a regular basis. Currently, LIBSVM version 2.88 is implemented in imageSVM through the IDL Java Bridge.

imageSVM is developed as a non-commercial product at the Geomatics Lab of Humboldt-Universität zu Berlin. By distributing the tool, the authors hope to increase the number of applications of imageSVM and in this way learn more about its performance, its strengths and its weaknesses. This is especially important given the great variety of data types, e.g. hyperspectral, SAR, or multi-temporal, and the different fields of application, e.g. aquatic, urban, or geological. The code is updated regularly to further improve the performance. Experiences from users will be considered, e.g. for default values, but also during methodological development. Users of imageSVM are hence strongly encouraged, and indeed expected, to report their experiences and problems.

imageSVM is programmed in IDL. It can be run using the IDL Virtual Machine™ or the EnMAP-Box and does not require a license of IDL or ENVI.

At the moment, imageSVM uses generic image file formats with an ENVI type header as used by the EnMAP-Box (Version 2.1, see Data Format Definition). These formats are used for remote sensing image data as well as for the input of reference data. The generation of reference data has to be performed outside of imageSVM. Please refer to section Data Type of this manual for information on file formats.

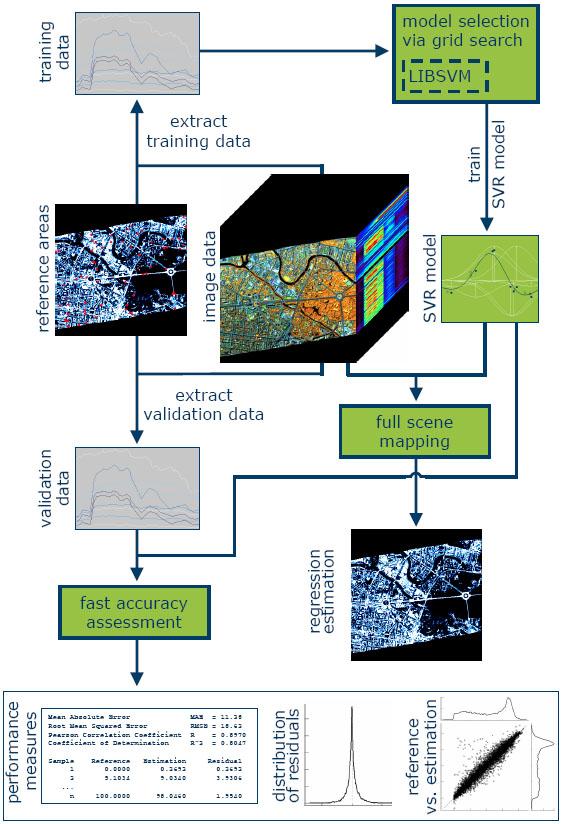

The classification/regression of image data in imageSVM follows a two-step approach consisting of (1) the parameterization of a support vector classifier (SVC) or support vector regression (SVR) based on reference data, and (2) the classification/regression of the image data itself (see figure). Splitting the workflow into these two steps allows different data sets to be used for parameterization and for image classification/regression, which saves processing time and allows multiple images to be processed. Moreover, the split makes the general idea of machine learning explicit: any supervised classification/regression creates an instance of the algorithm fitted to the reference data in a training process. This concept is important when the accuracy, reliability and transferability of results are discussed.

During the parameterization of the SVC/SVR advanced users are free to manipulate the parameters and in this way enhance the processing speed, generality of results and sometimes accuracy of the approach.

Moreover, imageSVM 3.0 offers the possibility to perform a selection of features during regression and classification. A very powerful algorithm for directly exploring the relationship between classification/regression accuracy and selected features is the so-called wrapper approach in combination with a stepwise forward selection/backward elimination heuristic for selecting relevant features. During forward selection, the approach begins with an empty feature set and SVM are trained for each single feature. The feature corresponding to the best performing SVM is then selected. In the second iteration, SVM are trained for each pair of features consisting of the previously best performing feature and one additional feature. Again, the pair of features corresponding to the best performing SVM is then selected. This step is repeated until all features are selected or a user-defined stopping criterion is reached. This results in a ranked list of features with corresponding performances. Backward elimination, on the other hand, starts with the full set of features and iteratively removes the feature that contributes least to the SVM’s accuracy. Both options are implemented in imageSVM 3.0.
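The forward selection loop described above can be sketched in a few lines of Python. This is only an illustration and not the imageSVM implementation: the function name `forward_selection`, the `score` callback (which stands in for training an SVM on a feature subset and returning its cross-validated error) and the toy error function are all assumptions made for this example.

```python
from typing import Callable, List, Optional, Sequence, Tuple


def forward_selection(
    features: Sequence[str],
    score: Callable[[List[str]], float],
    max_features: Optional[int] = None,
) -> List[Tuple[str, float]]:
    """Greedy forward selection; `score` returns an error (lower is better)."""
    selected: List[str] = []
    ranking: List[Tuple[str, float]] = []
    remaining = list(features)
    limit = len(remaining) if max_features is None else max_features
    while remaining and len(selected) < limit:
        # Evaluate the current subset extended by each remaining candidate.
        trials = [(score(selected + [f]), f) for f in remaining]
        best_error, best_feature = min(trials)
        selected.append(best_feature)
        remaining.remove(best_feature)
        ranking.append((best_feature, best_error))
    return ranking


# Hypothetical stand-in for "train an SVM and return its error":
# each band reduces the error by a fixed, made-up amount.
TOY_GAIN = {"b1": 0.5, "b2": 0.3, "b3": 0.1}


def toy_error(subset: List[str]) -> float:
    return 1.0 - sum(TOY_GAIN[f] for f in subset)


ranking = forward_selection(["b3", "b1", "b2"], toy_error)
print([feature for feature, _ in ranking])
```

The `max_features` argument plays the role of a user-defined stopping criterion; the result is a ranked list of features with the error reached after each addition.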

Background

Support vector machines are one of the more recent developments in the field of machine learning. They have proven to be reliable and accurate in many classification problems and have become a first-choice algorithm for many remote sensing users. For a detailed introduction to support vector machines and the underlying concepts, please refer to Vapnik (1998), Burges (1998), Smola and Schoelkopf (1998) or to relevant descriptions of SVM in the remote sensing context (Foody and Mathur 2004; Huang et al. 2002; Melgani and Bruzzone 2004; Waske and van der Linden 2008). Only a brief overview is given here:

The support vector machine is a universal learning machine for solving classification or regression problems (Smola and Schoelkopf 1998; Vapnik 1998) and can be seen as an implementation of Vapnik's Structural Risk Minimization principle. Its strength is the ability to model complex, non-linear class boundaries, or in the case of regression the relation between independent and dependent variables, in high dimensional feature spaces through the use of kernel functions and regularization.

In general, SVM for regression estimate a linear dependency by fitting an optimal approximating hyperplane to the training data in the multi-dimensional feature space. For problems that cannot be approximated linearly, the training data are implicitly mapped by a kernel function into a higher dimensional space, in which the new data distribution allows a better fit of a linear hyperplane; this hyperplane appears non-linear in the original feature space. The parameterization of an SVR requires the user to select the parameter(s) of the kernel function (g) as well as a regularization parameter (C) and a loss function parameter (ε). Once these parameters have been selected, a quadratic optimization problem is solved to construct the optimal approximating hyperplane. The solution of this optimization problem is a vector of weights, one for each training data vector (i.e. pixel). Only those training data vectors with non-zero weight, i.e. the support vectors, are needed to define the optimal approximating hyperplane.
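The role of the loss function parameter ε can be illustrated with the ε-insensitive loss used in standard support vector regression: deviations smaller than ε cost nothing, larger deviations are penalized linearly. The following Python sketch assumes this standard formulation; the function name is chosen for this example only.

```python
def epsilon_insensitive_loss(reference: float, estimate: float, epsilon: float) -> float:
    """Return 0 inside the epsilon tube and the excess deviation outside it."""
    if epsilon < 0:
        raise ValueError("epsilon must be non-negative")
    return max(0.0, abs(reference - estimate) - epsilon)


# A deviation of 0.05 lies inside a tube of width 0.1 and is not penalized.
print(epsilon_insensitive_loss(2.0, 2.05, 0.1))
# A deviation of 1.0 exceeds a tube of width 0.5 by 0.5.
print(epsilon_insensitive_loss(2.0, 3.0, 0.5))
```

A larger ε therefore tends to reduce the number of training vectors that contribute to the solution, at the cost of a coarser fit.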

In remote sensing applications the Gaussian radial basis function kernel $K(x, x_i) = \exp(-g \lVert x - x_i \rVert^2)$ has proven to be effective with reasonable processing times. It requires the selection of the parameter g, which defines the width of the Gaussian kernel function. The Gaussian kernel is a so-called universal kernel; an SVR with this kernel can therefore, in principle, model any relationship at any precision. The regularization parameter C determines the tradeoff between the flatness of the regression function and the amount up to which deviations larger than ε are tolerated.
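As a minimal sketch, the Gaussian kernel and the resulting regression estimate can be written in Python as follows. The function names and the example numbers are assumptions for illustration; the additive `bias` term follows the standard SVR formulation and is not described in this manual.

```python
import math
from typing import Sequence


def rbf_kernel(x: Sequence[float], xi: Sequence[float], g: float) -> float:
    """Gaussian RBF kernel exp(-g * ||x - xi||^2) for two feature vectors."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, xi))
    return math.exp(-g * squared_distance)


def svr_predict(
    x: Sequence[float],
    support_vectors: Sequence[Sequence[float]],
    weights: Sequence[float],
    bias: float,
    g: float,
) -> float:
    """Weighted sum of kernel values between x and each support vector."""
    return bias + sum(
        w * rbf_kernel(x, sv, g) for sv, w in zip(support_vectors, weights)
    )


# Identical vectors give a kernel value of 1; the value decays with distance.
print(rbf_kernel([1.0, 2.0], [1.0, 2.0], g=0.5))
print(rbf_kernel([0.0], [1.0], g=1.0))
# One made-up support vector with weight 2.0 and a bias of 0.5.
print(svr_predict([0.0], [[0.0]], [2.0], bias=0.5, g=1.0))
```

A larger g makes the kernel narrower, so each support vector influences only pixels that are very similar to it in feature space.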

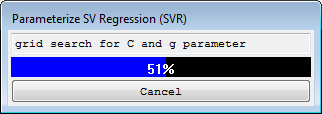

Both parameters, g and C, depend on the data range and distribution and are thus case specific. A common strategy to search for adequate values for g and C is a two-dimensional grid search with internal validation. This strategy is implemented in imageSVM. For tuning the loss function parameter ε we use an efficient search heuristic proposed by Rabe et al. (2013).

User guide

Data Type

The current version of imageSVM is delivered with the EnMAP-Box version 2.1. It is therefore compatible with all data types that can be loaded into the EnMAP-Box. Please refer to the EnMAP-Box Manual for further information.

In general, the EnMAP-Box version 2.1 and imageSVM 3.0 require input files to be stored in a generic file format with ENVI style header file. Accordingly, images, masks, and reference areas used during processing have to be provided as binary files with an ASCII header.
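To illustrate what a binary file with an ASCII header means in practice, the sketch below reads the key/value pairs of an ENVI-style header in Python. It is a simplified, illustrative parser, not the reader used by the EnMAP-Box; the header content is a made-up example, and only the general `key = value` layout with optional `{...}` blocks is assumed.

```python
from typing import Dict

# Made-up example header; the values do not describe a real file.
EXAMPLE_HEADER = """ENVI
description = {
  made-up example image }
samples = 300
lines = 200
bands = 3
header offset = 0
file type = ENVI Standard
data type = 4
interleave = bsq
byte order = 0
"""


def parse_envi_header(text: str) -> Dict[str, str]:
    """Parse 'key = value' pairs of an ENVI-style header into a dictionary."""
    lines = text.strip().splitlines()
    if not lines or lines[0].strip() != "ENVI":
        raise ValueError("an ENVI header must start with the keyword 'ENVI'")
    fields: Dict[str, str] = {}
    index = 1
    while index < len(lines):
        line = lines[index]
        index += 1
        if "=" not in line:
            continue  # skip blank or malformed lines
        key, value = line.split("=", 1)
        value = value.strip()
        # A value opened with '{' may continue over several lines until '}'.
        while value.startswith("{") and "}" not in value and index < len(lines):
            value += " " + lines[index].strip()
            index += 1
        if value.startswith("{"):
            value = value.strip("{}").strip()
        fields[key.strip().lower()] = value
    return fields


header = parse_envi_header(EXAMPLE_HEADER)
# Number of pixel values stored in the accompanying binary file.
n_values = int(header["samples"]) * int(header["lines"]) * int(header["bands"])
print(header["interleave"], n_values)
```

The header tells a reader how to interpret the raw binary file: image dimensions, numeric data type, band interleave and byte order.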

ENVI can be used to convert external files into the ENVI standard file type, which is read by the EnMAP-Box. If the image is open in ENVI, select File > Save As from the main menu bar. Select the Input File, click OK, and choose ENVI as the Output File type.

Parameterize SV Regression Model (SVR)

The parameterization of an SVR, which in the terminology of imageSVM refers to a completely parameterized support vector model for a certain regression problem, requires the definition of the kernel parameter g and the regularization parameter C. Ideal values for these parameters depend on the distribution of the training data in the feature space. It is hence useful to test ranges of parameters using a grid search with internal performance estimation. In doing so, combinations of g and C are tested and the combination with the best performance (e.g. based on a cross validation) is used for the training of the final SVR. In imageSVM, the user can decide to parameterize the SVR using either default or advanced settings.
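The grid search idea can be sketched as follows. This is an illustration under stated assumptions, not the imageSVM code: `cv_error` is a hypothetical callback that would train an SVR for one (g, C) pair and return its cross-validated error, and the toy error surface below is invented so that the example runs without any training data.

```python
import math
from itertools import product
from typing import Callable, Iterable, Tuple


def grid_search(
    g_values: Iterable[float],
    c_values: Iterable[float],
    cv_error: Callable[[float, float], float],
) -> Tuple[float, float, float]:
    """Test every (g, C) combination and return the one with the lowest error."""
    best = None
    for g, c in product(g_values, c_values):
        error = cv_error(g, c)
        if best is None or error < best[2]:
            best = (g, c, error)
    if best is None:
        raise ValueError("the grid is empty")
    return best


def toy_cv_error(g: float, c: float) -> float:
    # Invented error surface with its minimum at g = 0.1 and C = 100.
    return abs(math.log10(g) + 1.0) + abs(math.log10(c) - 2.0)


best_g, best_c, best_error = grid_search(
    [0.01, 0.1, 1.0, 10.0], [1.0, 10.0, 100.0, 1000.0], toy_cv_error
)
print(best_g, best_c)
```

In a real run the selected pair would then be used to train the final model on all training samples.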

Default settings

Default values for the grid search will be used to find ideal parameter values for g and C. In the test data set provided with the EnMAP-Box you will find hyperspectral imagery with corresponding reference values.

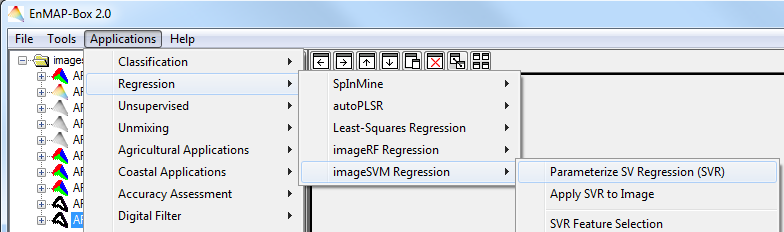

- Select File > Open > EnMAP-Box Test Images.

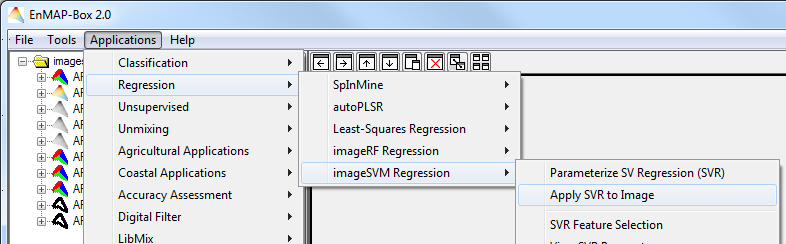

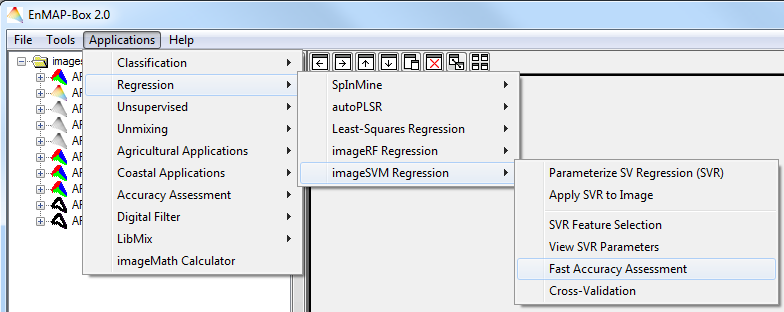

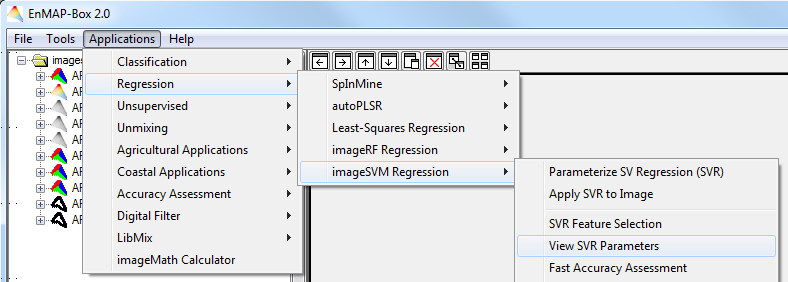

- Now select Applications > Regression > imageSVM Regression > Parameterize SV Regression (SVR).

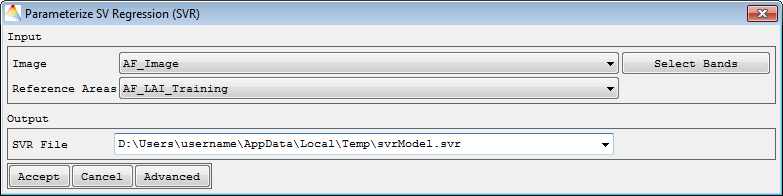

- Choose the Input Image, in this case ‘AF_Image’. On the right side you find a button to Select Bands, where it is possible to select a spectral subset of the image.

- Now select the file specifying the Reference Areas for the training, e.g. ‘AF_LAI_Training’. Then define where to save the *.svr file; by default, the temporary folder is selected.

- The default values can be examined by clicking Advanced (for more information see next section). In most cases, default values already lead to high accuracies.

Click Accept when you are finished.

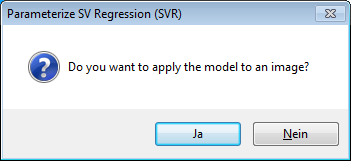

An *.svr file will be written to disk. After parameterization, a report on the generated SVR file opens in your HTML browser (explained further in section View SVR Parameters), and you are asked whether you want to apply the model to an image immediately. If you click yes, you proceed directly with the regression described in section Apply SVR to Image.

Advanced Settings

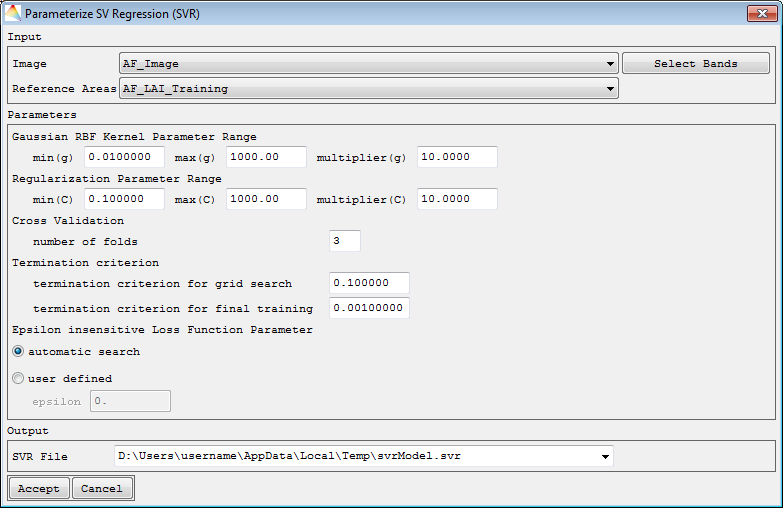

- Again select Applications > Regression > imageSVM Regression > Parameterize SV Regression (SVR). Click Advanced to continue with the advanced settings. The SVR parameterization dialog is now expanded.

Here, the user can modify the grid search by setting the following options:

- min (g/C), max (g/C): Minimum and maximum values that define the range of the grid (g and C dimension).

- Multiplier (g/C): Specifies the step size of the grid. For high numbers of features, e.g. in hyperspectral data, and high numbers of training samples, the time needed for the grid search might be relatively high. In this case it might be useful to increase the multiplier from 10 to 100 and investigate the performance surface before repeating the search for the relevant ranges with a smaller multiplier.

- Cross Validation: The accuracy of results during the grid search is monitored by n-fold cross validation on the training data. The number of folds might be increased depending on the heterogeneity of your data. This will, however, increase the time needed for the grid search.

- Termination criterion for grid search: During the grid search a value of 0.1 proved sufficient to select the best pair of parameters; however, it can be changed.

- Termination criterion for final training: For the training of the SVR using the best parameters or user-defined values, a default termination value of 0.001 is selected, which can also be changed.

On this basis, ideal parameter values for g and C will be found.
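The grid and cross-validation settings listed above can be made concrete with a short Python sketch. It assumes that the multiplier defines a geometric progression from the minimum to the maximum value and that folds are assigned by interleaving sample indices; both are assumptions for illustration, and imageSVM may build its grid and folds differently.

```python
from typing import List, Tuple


def grid_values(minimum: float, maximum: float, multiplier: float) -> List[float]:
    """Values minimum, minimum*multiplier, ... up to and including maximum."""
    if minimum <= 0 or maximum < minimum or multiplier <= 1:
        raise ValueError("need 0 < minimum <= maximum and multiplier > 1")
    values = []
    value = minimum
    # Small tolerance so that floating-point rounding does not drop the maximum.
    while value <= maximum * (1 + 1e-9):
        values.append(value)
        value *= multiplier
    return values


def kfold_indices(n_samples: int, n_folds: int) -> List[Tuple[List[int], List[int]]]:
    """Return (training, validation) index lists for each of the n folds."""
    if not 2 <= n_folds <= n_samples:
        raise ValueError("need 2 <= n_folds <= n_samples")
    splits = []
    for fold in range(n_folds):
        validation = list(range(fold, n_samples, n_folds))
        held_out = set(validation)
        training = [i for i in range(n_samples) if i not in held_out]
        splits.append((training, validation))
    return splits


# A multiplier of 10 gives five grid values per dimension, 100 only three.
print(len(grid_values(0.01, 100.0, 10.0)), len(grid_values(0.01, 100.0, 100.0)))
print(kfold_indices(6, 3)[0])
```

With five values each for g and C and 3-fold cross validation, 5 x 5 x 3 = 75 models are trained during the search, which shows why a coarser multiplier or fewer folds shortens the grid search considerably.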

- Specify the SVR File name and path for the Output. By default, the name “svrModel.svr” and the temporary folder are proposed. Click Accept when you are finished with the advanced settings.

A parameterized support vector regression file (*.svr) will be written to disk. Again, a report will open (see section View SVR Parameters) and you are asked whether you want to apply the model to the image immediately.

Apply SVR to Image

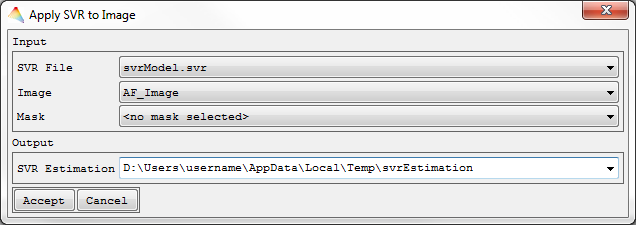

After successfully parameterizing the SVR, the user can apply the SVR to an image file.

- From the imageSVM Regression menu, select Apply SVR to Image.

- Select the generated *.svr File and the Image. Optional: Apply a Mask.

- Define where to save the output SVR Estimation and click Accept when you are finished.

Your svrEstimation will appear in the Filelist.

Fast Accuracy Assessment

For methodological studies it might be interesting to perform different trainings and only compare results based on a set of independent validation points, not the entire regression image. By using the Fast Accuracy Assessment tool, only the reference areas used for the independent validation will be estimated on the basis of the SVR. In addition, the MAE, RMSE and r² are reported.

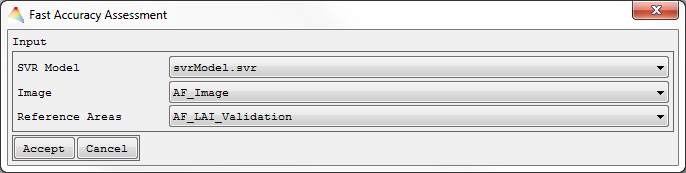

- From the imageSVM Regression menu, select Fast Accuracy Assessment.

- Specify the SVR Model you want to assess, on which Image it shall be applied and define the Reference Areas for independent validation.

- Click Accept.

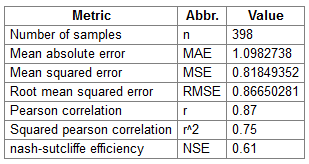

The report with the results will open in your HTML browser and displays the following information.

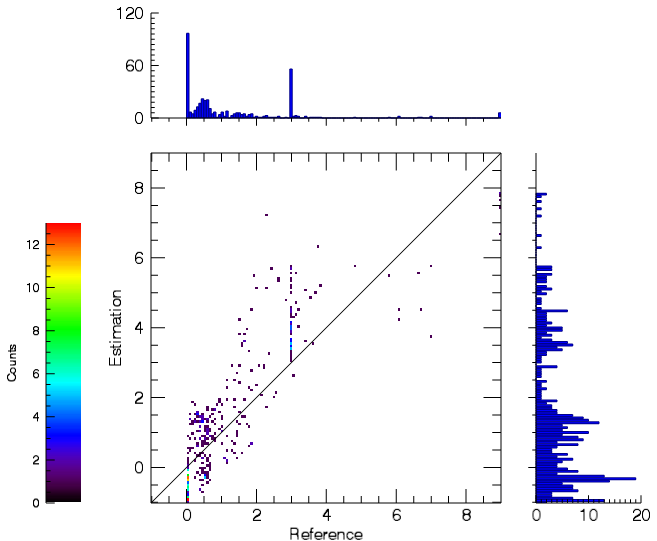

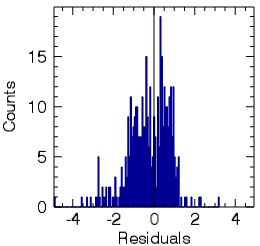

A table provides a textual report of the residual statistics, including the values for MAE, RMSE and r².

The scatter plot shows values of the validation reference against the SVR estimation. Ideally they are positioned along the diagonal, which would mean that all estimated values are equal to the reference values.

The last graph shows the probability density of the residuals. The residuals are calculated by subtracting the reference values from the estimated values. The position 0 corresponds to the diagonal in the previous graph.
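The residual statistics in the report can be reproduced with a few lines of Python. The sketch below computes the residuals as estimation minus reference, as described above. It assumes that r² denotes the squared Pearson correlation between reference and estimation; the manual does not state which definition imageSVM uses, and the sample values are made up.

```python
import math
from typing import Dict, Sequence


def residual_statistics(
    reference: Sequence[float], estimation: Sequence[float]
) -> Dict[str, float]:
    """Return MAE, RMSE and squared Pearson correlation for paired values."""
    n = len(reference)
    if n != len(estimation) or n < 2:
        raise ValueError("need two sequences of equal length with >= 2 values")
    residuals = [e - r for r, e in zip(reference, estimation)]
    mae = sum(abs(d) for d in residuals) / n
    rmse = math.sqrt(sum(d * d for d in residuals) / n)
    mean_r = sum(reference) / n
    mean_e = sum(estimation) / n
    cov = sum((r - mean_r) * (e - mean_e) for r, e in zip(reference, estimation))
    var_r = sum((r - mean_r) ** 2 for r in reference)
    var_e = sum((e - mean_e) ** 2 for e in estimation)
    if var_r == 0 or var_e == 0:
        raise ValueError("r2 is undefined for constant values")
    return {"mae": mae, "rmse": rmse, "r2": cov * cov / (var_r * var_e)}


# Made-up validation samples: every estimate is 0.5 too high.
stats = residual_statistics([1.0, 2.0, 3.0, 4.0], [1.5, 2.5, 3.5, 4.5])
print(stats)
```

The example shows why the three measures should be read together: a constant offset leaves the correlation perfect while MAE and RMSE still reveal the bias.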

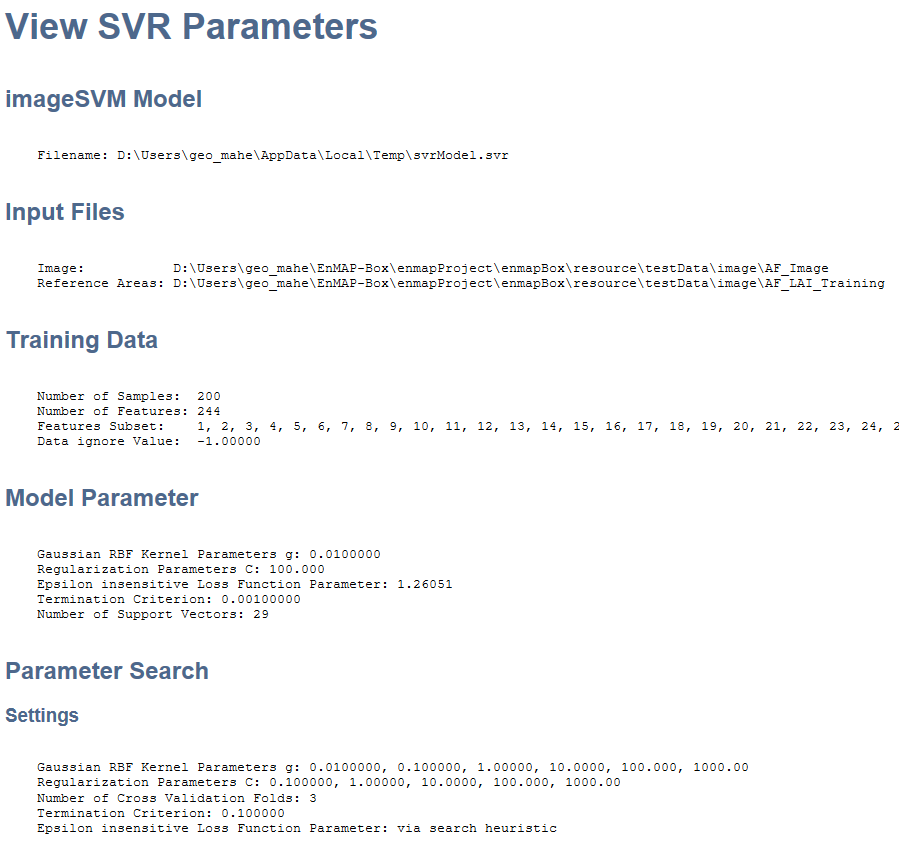

View SVR parameters

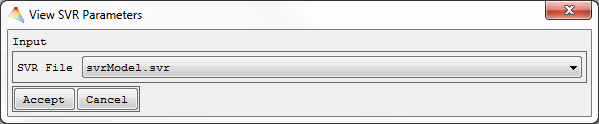

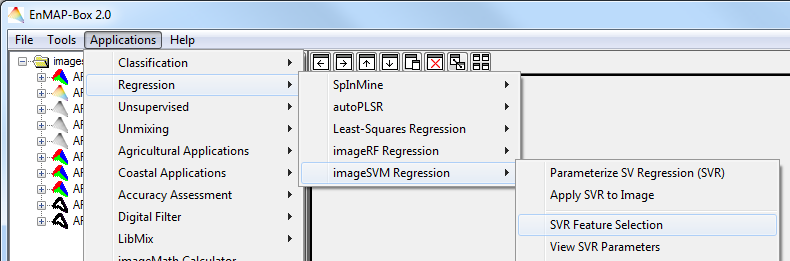

- The report opens automatically after successful parameterization. However, it can also be opened manually by selecting Applications > Regression > imageSVM Regression > View SVR Parameters.

- The following dialog asks you to specify the SVR file for which information will be displayed.

Click Accept, and your HTML browser will open with information on:

- imageSVM Model: path and name.

- Input Files: paths and names.

- Training Data: Information on Number of Samples (reference pixels), Number of Features (bands in the input image), Features Subset (selected bands, default=all) and Data ignore Value.

- Model Parameter: the selected optimal parameters g and C, the Epsilon insensitive Loss Function Parameter, the Termination Criterion and Number of Support Vectors.

- Parameter Search: information on the grid search settings.

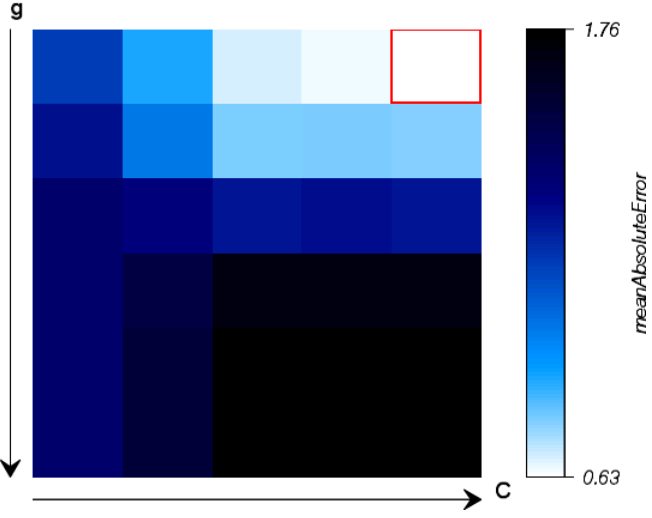

- The Search History with the Performance Surface plot illustrates the values of the performance estimator (mean absolute error) for all combinations of kernel and regularisation parameters that were tested during the grid search.

The performance surface makes it easy to reconstruct and evaluate the grid search. In general, good parameter combinations appear bright. You should, however, also check the performance estimator values themselves: the best combination will always appear bright, even if its actual performance is relatively poor. In case of poor performance, it might be useful to repeat the grid search with new ranges and step sizes, for example by widening the range towards values where better performance is expected. You can also exclude combinations with poor performance to save processing time, or decrease the step size in regions with good performance for a more detailed search.

SVR Feature Selection

One further option implemented in imageSVM 3.0 is an SVM-based wrapper approach for Feature Selection (Kohavi and John 1997). A smaller number of features may yield an accuracy that is not inferior to that of larger feature sets, while offering potential advantages regarding data storage and computational processing costs (Pal and Foody 2010). In imageSVM 3.0 a subset of the most important features within hyperspectral images can be selected for either image regression or image classification. This requires a previously built SVR or SVC model, respectively. After a model file is selected, the Search Strategy and Performance Measure have to be defined. In addition, the Number of Folds for Cross Validation (Performance Estimator) can be modified, and the user can choose whether Independent Validation is desired. In this way, the features of hyperspectral images can be ranked by importance and unimportant features can be discarded.

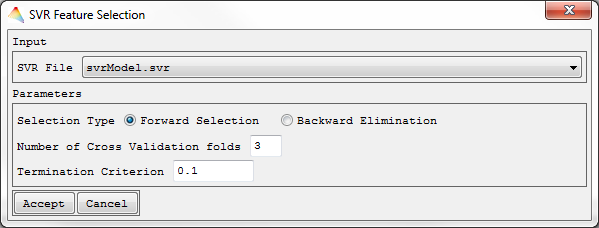

- From the imageSVM Regression menu, select SVR Feature Selection.

In the dialog that appears, the previously created SVR File is already selected; you can also choose a different one.

- Now choose the Selection Type:

- Forward Selection - During forward selection, the approach begins with an empty feature set and SVM are trained for each single feature. The feature corresponding to the best performing SVM is then selected. In the second iteration, SVM are trained for each pair of features consisting of the previously best performing feature and one additional feature. Again, the pair of features corresponding to the best performing SVM is then selected. This step is repeated until all features are selected or a user-defined stopping criterion is reached. This results in a ranked list of features with corresponding performances.

- Backward Elimination - Backward elimination, on the other hand, starts with the full set of features and iteratively removes the feature that contributes least to the SVM’s accuracy.

- Define the Number of Cross Validation folds; the default is 3.

- Define the Termination Criterion; the default is 0.1.

- Click Accept.

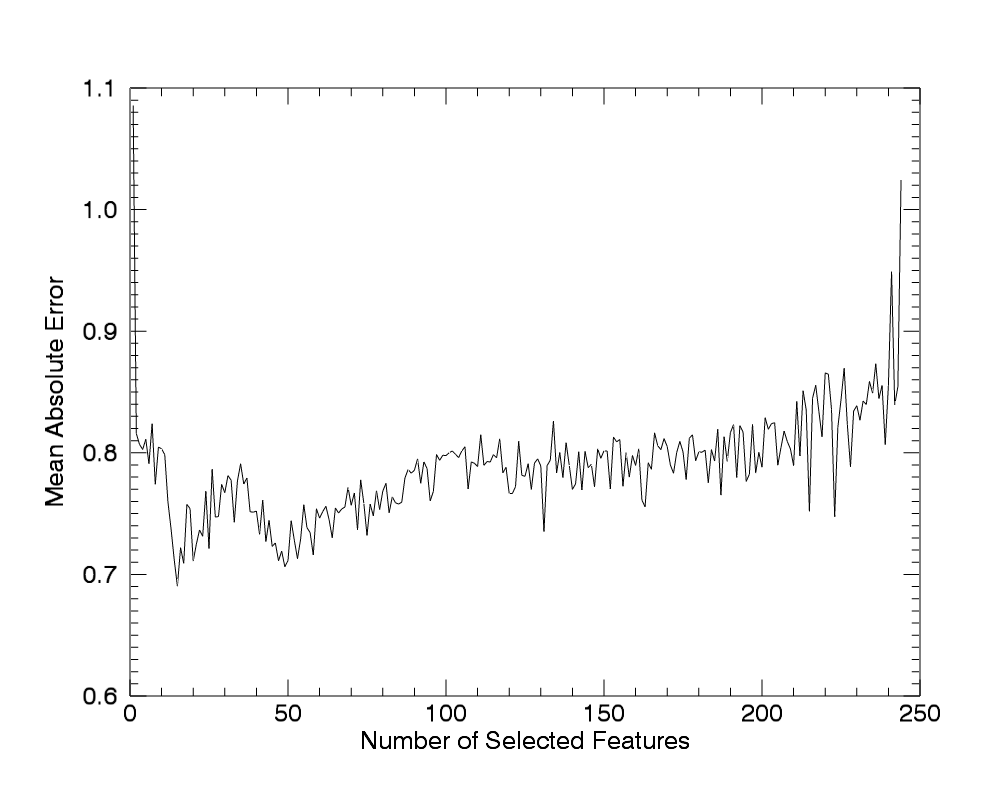

The Mean Absolute Error is used for the internal performance evaluation, i.e. for the decision of the next feature to select/eliminate. After the chosen options have been accepted the feature selection will be calculated. The duration of the process depends on the number of features and training samples and may take up to several hours in case of hyperspectral imagery. The results are presented in an HTML report and will open up in your browser.
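Backward elimination can be sketched in Python in the same spirit. This is an illustration, not the imageSVM code: the `score` callback is a hypothetical stand-in for training an SVR on a feature subset and returning its cross-validated mean absolute error, and the toy error function is invented for the example.

```python
from typing import Callable, List, Sequence, Tuple


def backward_elimination(
    features: Sequence[str],
    score: Callable[[List[str]], float],
) -> Tuple[List[Tuple[str, float]], List[str]]:
    """Repeatedly drop the feature whose removal gives the lowest error."""
    current = list(features)
    eliminated: List[Tuple[str, float]] = []
    while len(current) > 1:
        # Score the subset that remains after removing each candidate in turn.
        trials = [
            (score([f for f in current if f != candidate]), candidate)
            for candidate in current
        ]
        best_error, least_useful = min(trials)
        current.remove(least_useful)
        eliminated.append((least_useful, best_error))
    return eliminated, current


# Hypothetical stand-in for "train an SVR and return its MAE":
# each band reduces the error by a fixed, made-up amount.
TOY_GAIN = {"b1": 0.5, "b2": 0.3, "b3": 0.1}


def toy_error(subset: List[str]) -> float:
    return 1.0 - sum(TOY_GAIN[f] for f in subset)


eliminated, remaining = backward_elimination(["b1", "b2", "b3"], toy_error)
print([feature for feature, _ in eliminated], remaining)
```

Each iteration trains one model per remaining feature, so the number of trainings grows roughly with the square of the number of bands, which is consistent with the long run times mentioned above for hyperspectral imagery.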

In the report, the model file used and the selected parameters are shown, then the Feature Ranking is listed with a learning curve giving a graphical representation of the results. The Search History of each iteration is tabulated. In each table, the selected features/feature subsets are listed with their performances. Furthermore the Best Performing Subset up to this iteration and the Selected Feature of the current iteration are listed.

References

Burges, C.J.C. (1998). A tutorial on Support Vector Machines for pattern recognition. Data Mining and Knowledge Discovery, 2, 121-167

Chang, C.-C., & Lin, C.-J. (2001). LIBSVM: a library for support vector machines. In ACM Transactions on Intelligent Systems and Technology (TIST) 2 (3), 27.

Foody, G.M., & Mathur, A. (2004). A relative evaluation of multiclass image classification by support vector machines. IEEE Transactions on Geoscience and Remote Sensing, 42, 1335-1343

Pal, M., & Foody, G.M. (2010). Feature Selection for Classification of Hyperspectral Data by SVM. IEEE Transactions on Geoscience and Remote Sensing, 48, 2297-2307

Huang, C., Davis, L.S., & Townshend, J.R.G. (2002). An assessment of support vector machines for land cover classification. International Journal of Remote Sensing, 23, 725-749

Kohavi, R., & John, G.H. (1997). Wrappers for feature subset selection. Artificial Intelligence, 97, 273-324

Melgani, F., & Bruzzone, L. (2004). Classification of hyperspectral remote sensing images with support vector machines. IEEE Transactions on Geoscience and Remote Sensing, 42, 1778-1790

Rabe, A., Jakimow, B., Van der Linden, S., Okujeni, A., Suess, S., Leitao, P., & Hostert, P. (2013). Simplifying Support Vector Regression Parameterisation by Heuristic Search for Optimal e-Loss. In: 8th EARSeL Workshop of Special Interest Group in Imaging Spectroscopy. Nantes, France

Smola, A., & Schoelkopf, B. (1998). A Tutorial on Support Vector Regression

Vapnik, V.N. (1998). Statistical Learning Theory. New York: Wiley

Waske, B., & van der Linden, S. (2008). Classifying multilevel imagery from SAR and optical sensors by decision fusion. IEEE Transactions on Geoscience and Remote Sensing, 46, 1457-1466

License agreements

Redistribution and use of imageSVM in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

1. Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

2. Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

3. Neither name of copyright holders nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

THE SOFTWARE "image SVM" IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE REGENTS OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

imageSVM requires LIBSVM by Chih-Chung Chang and Chih-Jen Lin. LIBSVM is available at http://www.csie.ntu.edu.tw/~cjlin/libsvm. [Please note the separate copyright statement for LIBSVM]
