OpenAMP

- OpenAMP (Open Asymmetric Multi-Processing) is an open-source software framework for developing application software for AMP systems.

- This notebook loosely follows the Xilinx reference documentation UG1186, which addresses the ZynQ7k and ZynQMP hardware platforms.

- Details specific to the 2019.2 PetaLinux build can be checked on the Atlassian reference pages here.

Linux RemoteProc

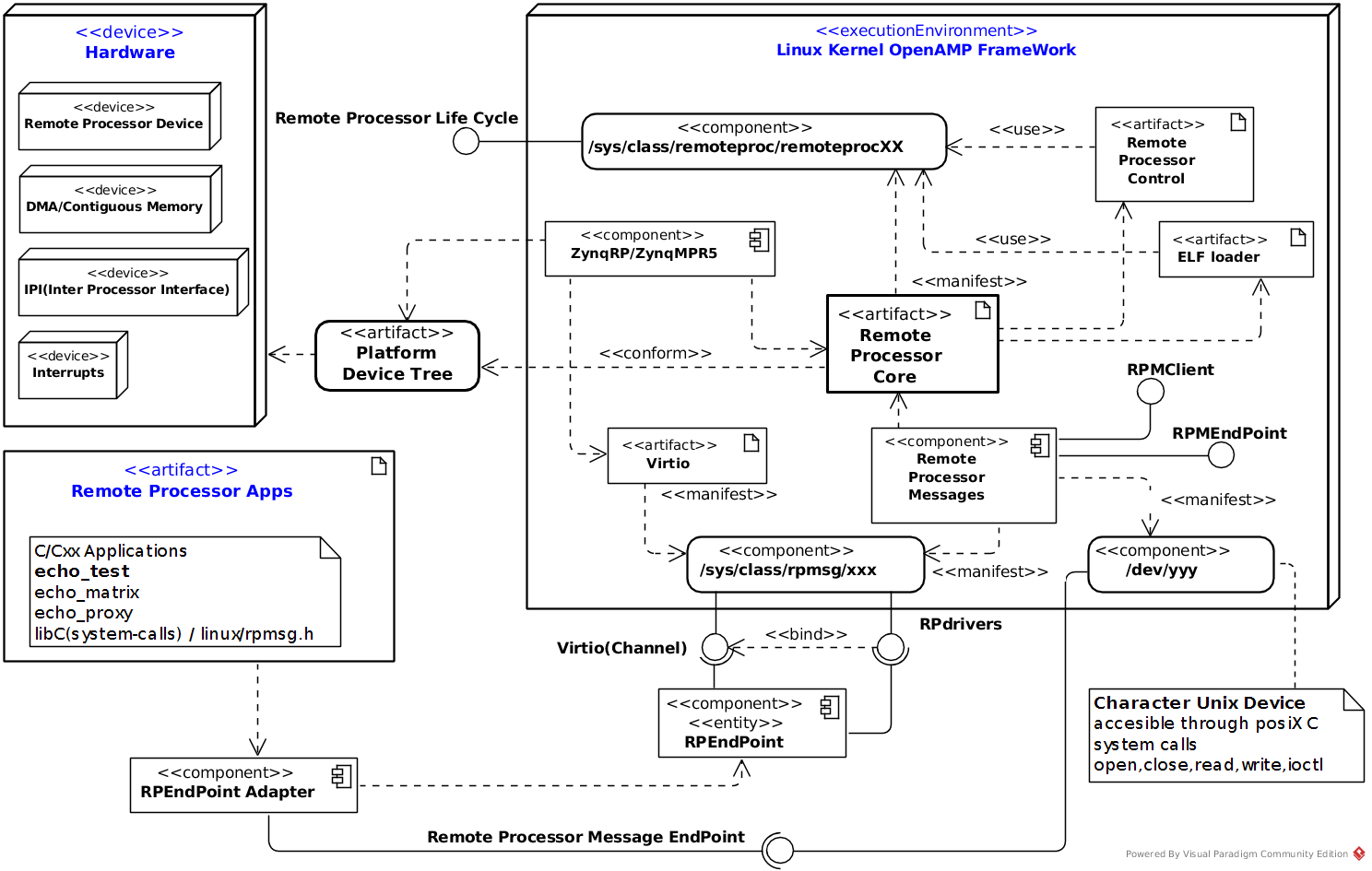

RemoteProc is the Linux kernel framework that controls and monitors the remote processors of an asymmetric multiprocessing (AMP) configuration through a convenient set of hardware abstractions. Each processor may run a different operating system instance, whether Linux or any flavor of real-time OS.

The picture shows the software layout of the main GNU/Linux components, including the specific support for the Xilinx devices.

Linux Device Trees for Remote Processor support

The remote processor resources are imported from the Linux system .dtb, so the platform device tree blob must describe every resource required by the AMP system.

ZynQ-7k

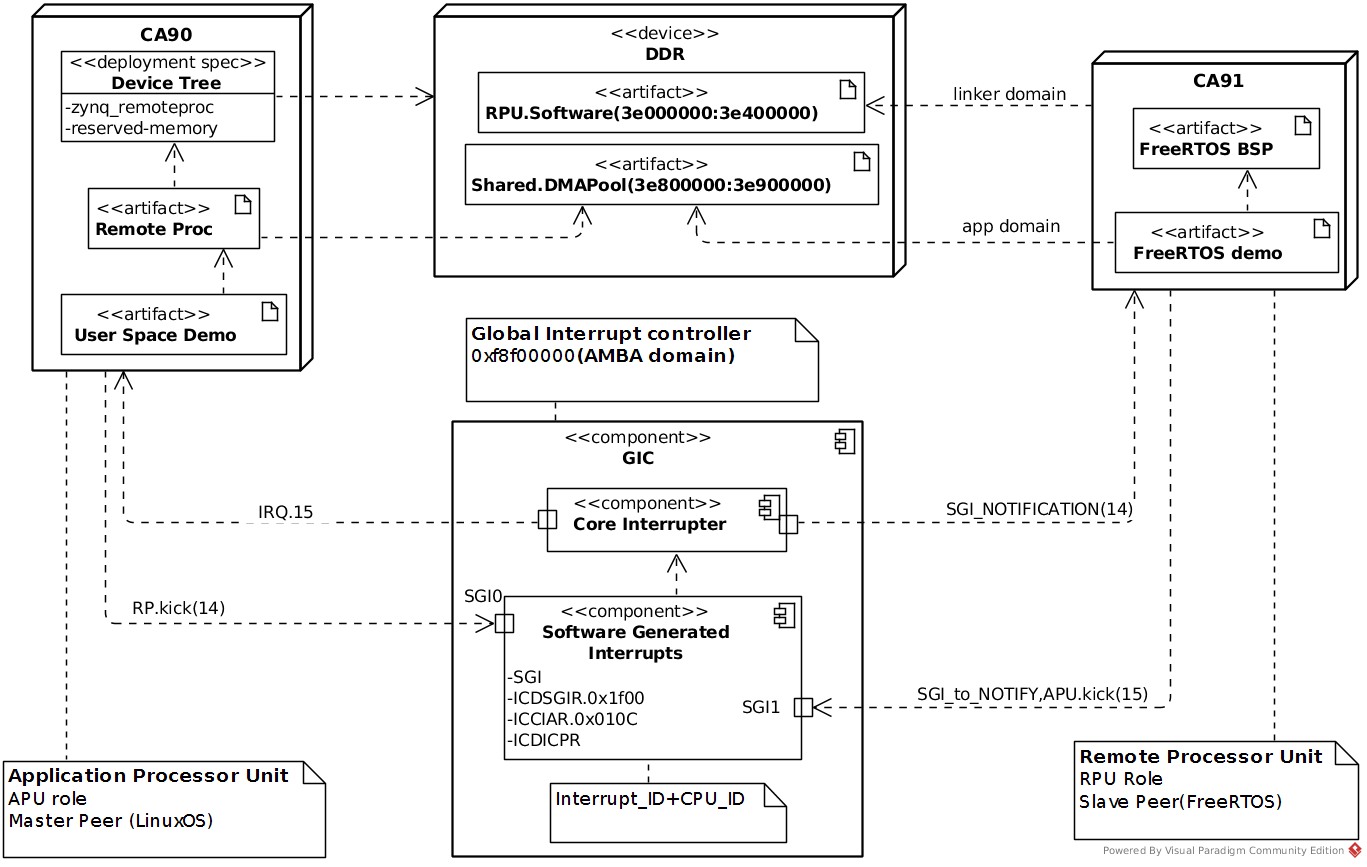

The memory addresses and interrupt numbers match the reference examples provided by OpenAMP and Xilinx.

The system Linux device tree must include the following sections for the AMP proposal above:

    ...
    reserved-memory {
        #address-cells = <1>;
        #size-cells = <1>;
        ranges;
        rproc_0_reserved: rproc@3e000000 {
            no-map;
            reg = <0x3e000000 0x400000>;
        };
        rproc_0_dma: rproc@3e800000 {
            no-map;
            compatible = "shared-dma-pool";
            reg = <0x3e800000 0x100000>;
        };
    };

    SlaveBMA91 {
        compatible = "xlnx,zynq_remoteproc";
        vring0 = <15>;
        vring1 = <14>;
        memory-region = <&rproc_0_reserved>, <&rproc_0_dma>;
        status = "okay";
        firmware = "firmware";
    };
    ...

    root@QEmuZynQ:~# dmesg | grep remote
    [ 7.564144] zynq_remoteproc SlaveBMA91: assigned reserved memory node rproc@3e800000
    [ 7.569532] remoteproc remoteproc0: SlaveBMA91 is available

    root@QEmuZynQ:~# ls /sys/class/remoteproc/remoteproc0/ -al
    drwxr-xr-x 3 root root    0 Jan  1  1970 .
    drwxr-xr-x 3 root root    0 Jan  1  1970 ..
    lrwxrwxrwx 1 root root    0 Feb 10 14:51 device -> ../../../SlaveBMA91
    -rw-r--r-- 1 root root 4096 Feb 10 14:51 firmware
    drwxr-xr-x 2 root root    0 Feb 10 14:51 power
    -rw-r--r-- 1 root root 4096 Feb 10 14:51 state
    lrwxrwxrwx 1 root root    0 Feb 10 14:51 subsystem -> ../../../../../class/remoteproc
    -rw-r--r-- 1 root root 4096 Jan  1  1970 uevent

ZynQMP

Below are the main hardware resources required to implement the reference application note.

In this case the root node (/) of the system Linux device tree must be populated with sections like the ones below, which describe the resources of the remote processors R5.0/R5.1 (system memory, IPI and interrupts):

    /include/ "system-conf.dtsi"
    / {
        reserved-memory {
            #address-cells = <2>;
            #size-cells = <2>;
            ranges;
            rproc_0_dma: rproc@3ed40000 {
                no-map;
                compatible = "shared-dma-pool";
                reg = <0x0 0x3ed40000 0x0 0x100000>;
            };
            rproc_0_reserved: rproc@3ed00000 {
                no-map;
                reg = <0x0 0x3ed00000 0x0 0x40000>;
            };
            rproc_1_dma: rproc@3ef40000 {
                no-map;
                compatible = "shared-dma-pool";
                reg = <0x0 0x3ef40000 0x0 0x100000>;
            };
            rproc_1_reserved: rproc@3ef00000 {
                no-map;
                reg = <0x0 0x3ef00000 0x0 0x40000>;
            };
        };

        zynqmp-rpu {
            compatible = "xlnx,zynqmp-r5-remoteproc-1.0";
            core_conf = "split";
            #address-cells = <2>;
            #size-cells = <2>;
            ranges;
            r5_0: r5@0 {
                #address-cells = <2>;
                #size-cells = <2>;
                ranges;
                memory-region = <&rproc_0_reserved>, <&rproc_0_dma>;
                pnode-id = <0x18110005>;
                mboxes = <&ipi_mailbox_rpu0 0>, <&ipi_mailbox_rpu0 1>;
                mbox-names = "tx", "rx";
                tcm_0_a: tcm_0@0 {
                    reg = <0x0 0xFFE00000 0x0 0x10000>;
                    pnode-id = <0x1831800b>;
                };
                tcm_0_b: tcm_0@1 {
                    reg = <0x0 0xFFE20000 0x0 0x10000>;
                    pnode-id = <0x1831800c>;
                };
            };
            r5_1: r5@1 {
                #address-cells = <2>;
                #size-cells = <2>;
                ranges;
                memory-region = <&rproc_1_reserved>, <&rproc_1_dma>;
                pnode-id = <0x18110006>;
                mboxes = <&ipi_mailbox_rpu1 0>, <&ipi_mailbox_rpu1 1>;
                mbox-names = "tx", "rx";
                tcm_1_a: tcm_1@0 {
                    reg = <0x0 0xFFE90000 0x0 0x10000>;
                    pnode-id = <0x603060d>;
                };
                tcm_1_b: tcm_1@1 {
                    reg = <0x0 0xFFEB0000 0x0 0x10000>;
                    pnode-id = <0x603060e>;
                };
            };
        };

        zynqmp_ipi1 {
            compatible = "xlnx,zynqmp-ipi-mailbox";
            interrupt-parent = <&gic>;
            interrupts = <0 33 4>;
            xlnx,ipi-id = <5>;
            #address-cells = <1>;
            #size-cells = <1>;
            ranges;

            /* APU<->RPU0 IPI mailbox controller */
            ipi_mailbox_rpu0: mailbox@ff990600 {
                reg = <0xff3f0ac0 0x20>, <0xff3f0ae0 0x20>,
                      <0xff3f0740 0x20>, <0xff3f0760 0x20>;
                reg-names = "local_request_region", "local_response_region",
                            "remote_request_region", "remote_response_region";
                #mbox-cells = <1>;
                xlnx,ipi-id = <3>;
            };

            /* APU<->RPU1 IPI mailbox controller */
            ipi_mailbox_rpu1: mailbox@ff990640 {
                reg = <0xff3f0b00 0x20>, <0xff3f0b20 0x20>,
                      <0xff3f0940 0x20>, <0xff3f0960 0x20>;
                /* Alternative mapping, disabled:
                 * reg = <0xff990600 0x20>, <0xff990620 0x20>,
                 *       <0xff9904c0 0x20>, <0xff9904e0 0x20>;
                 * xlnx,ipi-id = <2>;
                 */
                reg-names = "local_request_region", "local_response_region",
                            "remote_request_region", "remote_response_region";
                #mbox-cells = <1>;
                xlnx,ipi-id = <4>;
            };
        };
    };

If the device tree is properly decoded and the Linux kernel is built with OpenAMP support, the /var/log/messages log file should contain something like:

    root@korolev:~# grep -e ipi -e r5 /var/log/messages
    [ 2.802318] zynqmp-ipi-mbox mailbox@ff990400: Probed ZynqMP IPI Mailbox driver.
    [ 2.810000] zynqmp-ipi-mbox mailbox@ff90000: Probed ZynqMP IPI Mailbox driver.
    [ 2.813486] zynqmp-ipi-mbox mailbox@ff3f0b00: Probed ZynqMP IPI Mailbox driver.
    [ 41.327057] zynqmp_r5_remoteproc ff9a0000.zynqmp-rpu: RPU core_conf: split
    [ 41.340320] r5@0: DMA mask not set
    [ 41.341289] r5@0: assigned reserved memory node rproc0dma_@3ed400000
    [ 41.356991] remoteproc remoteproc0: r5@0 is available
    [ 41.364092] r5@1: DMA mask not set
    [ 41.365118] r5@1: assigned reserved memory node rproc@3ee40000
    [ 41.374240] remoteproc remoteproc1: r5@1 is available
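Since every reserved-memory child is carved out of the same DDR window, it can help to check the layout before rebuilding the device tree. The following is a minimal sketch, assuming the base addresses and sizes of the ZynQMP listing in this section; the helper name `overlaps` is hypothetical and not part of any Xilinx tool.

```python
from itertools import combinations

# (label, base, size) copied from the reserved-memory node of the ZynQMP listing.
REGIONS = [
    ("rproc_0_reserved", 0x3ED00000, 0x40000),
    ("rproc_0_dma", 0x3ED40000, 0x100000),
    ("rproc_1_reserved", 0x3EF00000, 0x40000),
    ("rproc_1_dma", 0x3EF40000, 0x100000),
]


def overlaps(regions):
    """Return the pairs of labels whose [base, base + size) ranges intersect."""
    clashes = []
    for (name_a, base_a, size_a), (name_b, base_b, size_b) in combinations(regions, 2):
        if base_a < base_b + size_b and base_b < base_a + size_a:
            clashes.append((name_a, name_b))
    return clashes


for name, base, size in REGIONS:
    print(f"{name:18s} 0x{base:08x} - 0x{base + size - 1:08x}")
print("overlaps:", overlaps(REGIONS))
```

The same function can be fed with any other region list, for instance after adding the shared memory nodes of another demo to the same tree.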

Deployment

SysFs

The kernel needs to know where the remote CPU .elf is located. The search path is set through the path entry under /sys/module/firmware_class/parameters. This setting is shared with other firmware stacks (wireless, Ethernet, Bluetooth, fpga_manager...).

    # echo <new_firmware_path> > /sys/module/firmware_class/parameters/path
    # echo <YourRemoteImage.elf> > /sys/class/remoteproc/remoteproc<X>/firmware
    # echo <start|stop> > /sys/class/remoteproc/remoteproc<X>/state

    root@korolev # echo TestingBMCa9.x > /sys/class/remoteproc/remoteproc0/firmware
    root@korolev # echo start > /sys/class/remoteproc/remoteproc0/state
    [ 5472.230203] remoteproc remoteproc0: powering up SlaveBMA91
    [ 5473.047240] remoteproc remoteproc0: Booting fw image TestingBMCa9.x, size 3353388
    [ 5473.366092] virtio_rpmsg_bus virtio0: rpmsg host is online
    [ 5473.407239] remoteproc remoteproc0: registered virtio0 (type 7)
    [ 5473.408373] remoteproc remoteproc0: remote processor SlaveBMA91 is now up

    root@korolev:/lib/firmware# cat /proc/cpuinfo
    processor : 0
    model name : ARMv7 Processor rev 0 (v7l)
    BogoMIPS : 1136.64
    Features : half thumb fastmult vfp edsp neon vfpv3 tls vfpd32
    CPU implementer : 0x41
    CPU architecture: 7
    CPU variant : 0x0
    CPU part : 0xc09
    CPU revision : 0

    Hardware : Xilinx Zynq Platform
    Revision : 0000
    Serial : 0000000000000000

    root@korolev:/lib/firmware# echo stop > /sys/class/remoteproc/remoteproc0/state
    [ 348.974761] remoteproc remoteproc0: stopped remote processor SlaveBMA91
    root@korolev:/lib/firmware# cat /proc/cpuinfo
    processor : 0
    model name : ARMv7 Processor rev 0 (v7l)
    BogoMIPS : 1136.64
    Features : half thumb fastmult vfp edsp neon vfpv3 tls vfpd32
    CPU implementer : 0x41
    CPU architecture: 7
    CPU variant : 0x0
    CPU part : 0xc09
    CPU revision : 0

    processor : 1
    model name : ARMv7 Processor rev 0 (v7l)
    BogoMIPS : 1156.71
    Features : half thumb fastmult vfp edsp neon vfpv3 tls vfpd32
    CPU implementer : 0x41
    CPU architecture: 7
    CPU variant : 0x0
    CPU part : 0xc09
    CPU revision : 0

    Hardware : Xilinx Zynq Platform
    Revision : 0000
    Serial : 0000000000000000

    #!/bin/sh
    # Load a firmware image on remoteproc0 and (re)start the remote processor.
    # Usage: <script> [elf_image | recycle]
    C4='\033[00;34m'
    C5='\033[00;32m'
    BD='\033[00;1m'
    RC='\033[0m'
    auditor="${C5}`basename $0`:${RC}"
    export LANG=""
    printf "\n"
    date

    elf_image=$1
    [ -z "$elf_image" ] && elf_image="image_echo_test"
    target="remoteproc0"
    rproc_path="/sys/class/remoteproc/${target}"

    # Bail out when the remoteproc sysfs entry does not exist.
    current_firmware="`cat ${rproc_path}/firmware`"
    [ "$?" != 0 ] && printf "${auditor} <${C4}${target}${RC}> undefined remote processor structure ${C4}@`hostname`${RC}\n\n" && exit 1

    # "recycle" restarts the processor with the firmware already selected.
    if [ "$1" = "recycle" ]; then
        printf "${auditor} ReCycling <${C4}${target}:${current_firmware}${RC}> processor\n"
        echo stop > ${rproc_path}/state
        echo start > ${rproc_path}/state
        exit 0
    fi

    [ ! -f "/lib/firmware/${elf_image}" ] && printf "${auditor} ${elf_image} No such file\n" && exit 2

    printf "${auditor} firmware on ${C4}${target}${RC}(/lib/firmware/) -> ${C5}${current_firmware}${RC}\n"
    printf "now re-addressed to -> ${C5}${elf_image}${RC}\n"

    # The firmware entry can only be changed while the processor is stopped.
    [ "`cat ${rproc_path}/state`" = "running" ] && echo stop > ${rproc_path}/state
    echo ${elf_image} > ${rproc_path}/firmware
    echo start > ${rproc_path}/state

    # Show the latest virtio messages from the kernel log.
    tmp_file="./openamp.`whoami`.log"
    hostname="`hostname`"
    grep virtio /var/log/messages | tail -15 | sed 's/user.info kernel//g' > ${tmp_file}
    tail -7 ${tmp_file}
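The sysfs sequence of this section (set the firmware path, select the image, stop if running, start) can also be driven from Python. This is only a sketch under stated assumptions: the function name `load_firmware` and its `sysfs_root` parameter are hypothetical, the latter added so the logic can be tried against a scratch directory, and on a real target it would need root privileges.

```python
from pathlib import Path


def load_firmware(image, target="remoteproc0", sysfs_root="/sys", firmware_dir=None):
    """Select `image` for `target` and start it, stopping it first if it is running."""
    root = Path(sysfs_root)
    rproc = root / "class" / "remoteproc" / target
    if firmware_dir is not None:
        # Optional: redirect the kernel firmware search path.
        (root / "module" / "firmware_class" / "parameters" / "path").write_text(firmware_dir)
    state = rproc / "state"
    # The firmware entry is only updated while the processor is stopped.
    if state.read_text().strip() == "running":
        state.write_text("stop")
    (rproc / "firmware").write_text(image)
    state.write_text("start")
```

A call such as `load_firmware("image_echo_test")` mirrors what the shell script above does with its default argument.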

Auto

The remote processor can be booted automatically during platform boot using the auto_boot flag set in the device tree specification. This approach requires:

- The filesystem of the platform Linux must be available before the remoteproc driver is probed.

- The firmware must be present in /lib/firmware before the remoteproc driver is probed.

- The firmware must be named rproc-%s-fw, where %s corresponds to the name of the remoteproc node in the device tree.

For example, if the firmware is named rproc-SlaveBMA91-fw, the device tree entry must be defined as:

    SlaveBMA91 {
        compatible = "xlnx,zynq_remoteproc";
        [...]
        status = "okay";
        auto_boot;
    };
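The naming rule for auto boot is easy to get wrong, so a small check before rebooting can save a boot cycle. A minimal sketch, assuming the rproc-%s-fw convention and the /lib/firmware location stated above; `auto_boot_firmware` is a hypothetical helper name.

```python
from pathlib import Path


def auto_boot_firmware(node_name, firmware_dir="/lib/firmware"):
    """Return the path where the firmware is expected for the given device tree node."""
    return Path(firmware_dir) / f"rproc-{node_name}-fw"


path = auto_boot_firmware("SlaveBMA91")
print(path, "(present)" if path.exists() else "(missing)")
```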

EarlyBoot

- With this approach, the slave co-processor is started by U-Boot before the platform kernel is deployed. This can be a very handy alternative for applications with hard boot-time constraints, since they can control the critical aspects of the application with minimal delay.

- On platform boot, the remoteproc framework attaches itself to the co-processor firmware by parsing the run-time device tree patched by U-Boot. The use of this environment is fully explained here.

Linux RemoteMsg

- RPMsg is a virtio-based messaging bus that allows Linux kernel drivers to communicate with the remote processors available on the system. Drivers can expose appropriate user-space interfaces on top of these communications, if needed.

- Each RPMsg device is a communication channel with a remote processor. Channels are identified by a textual name, a local address (32 bits) and a remote address (32 bits). A driver listening on a channel binds its rx_callback to a unique local address. The RPMsg core dispatches inbound messages to the appropriate driver according to their destination address.

- Further information on the kernel API can be consulted here.

- The OpenAMP framework provides reference applications in its GitHub repository.

- The echo-test example has been taken as a case study. It has been refactored using a modern C++ approach with the C++17 standard. Complete sources can be found here.
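The address-based dispatch described in the list above can be pictured with a toy model. This is not the kernel API: the class `ToyRpmsgCore`, its methods and the addresses 0x400/0x401 are all hypothetical, chosen only to show how a destination address selects the callback bound by a driver.

```python
class ToyRpmsgCore:
    """Toy model of address-based dispatch; it is not the kernel API."""

    def __init__(self):
        self._endpoints = {}  # local address -> rx callback

    def bind(self, local_addr, rx_callback):
        """Bind a callback to a unique local address."""
        if local_addr in self._endpoints:
            raise ValueError(f"address 0x{local_addr:x} already bound")
        self._endpoints[local_addr] = rx_callback

    def dispatch(self, src, dst, payload):
        """Deliver an inbound message to the callback bound to `dst`."""
        callback = self._endpoints.get(dst)
        if callback is None:
            return False  # nobody listens on this address: message dropped
        callback(payload, src)
        return True


core = ToyRpmsgCore()
received = []
core.bind(0x400, lambda data, src: received.append((src, data)))
core.dispatch(src=0x1, dst=0x400, payload=b"hello")
core.dispatch(src=0x1, dst=0x401, payload=b"lost")
print(received)
```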

LibMetal

Libmetal provides common user APIs to access devices, handle device interrupts and request memory across the following operating environments:

- Linux user space (based on UIO and VFIO support in the kernel)

- RTOS (with and without virtual memory)

- Bare-metal environments

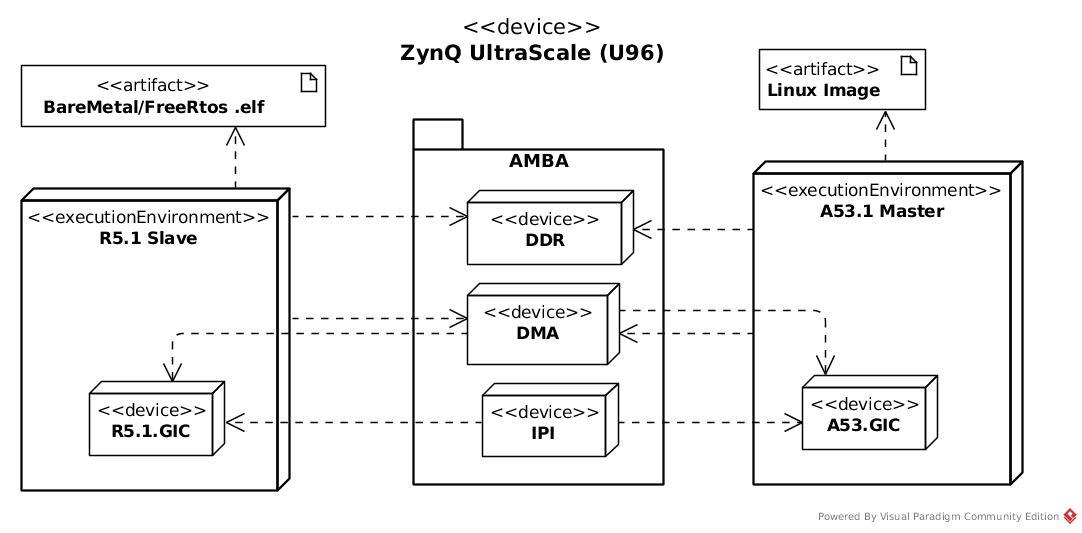

Further description on this component is found here. All the examples provided by the master branch target the R5 processor of the ZynQMP ultrascale SOC.

Linux Device Trees for the libmetal deployment

All the memory used by the reference demos is mapped onto the DDR space. Libmetal makes it accessible from Linux user space through the UIO driver model.

Some parts of the libmetal demos show how the IPI (Inter-Processor Interrupt) of the ZynQMP SoC can be used to enhance the control and performance of the AMP application.

The R5 .elf demo firmware maps its metal device onto the interrupt registers at 0xFF31_0000 and uses GIC vector 65 to process any kind of activity interrupt, i.e. it uses IPI channel 1 to interact with the APU. These settings can be tweaked in the sys_init.c source code if any collision with the APU hardware resource map is detected.

The R5 ISR ipi_irq_handler of each demo filters the incoming interrupt with the mask IPI_MASK (0x01000000, i.e. bit 24) applied to the interrupt status register at offset IPI_ISR_OFFSET (0x10). So the external device, i.e. the APU, is expected to kick the mechanism on IPI channel 7, which is by default the first PL channel assigned to the IPI module, i.e. PL IPI 0 (according to the UG1087 documentation reference).

In order to test the provided demos, the root entry of the Linux device tree must be extended with something like:

    / {
        shm@0 {
            compatible = "shm_uio";
            reg = < 0x00 0x3ed80000 0x00 0x1000000 >;
        };
        shm_desc@1 {
            compatible = "shm_uio";
            reg = < 0x00 0x3ed00000 0x00 0x10000 >;
        };
        shm_desc@2 {
            compatible = "shm_uio";
            reg = < 0x00 0x3ed10000 0x00 0x10000 >;
        };
        shm_dev_name@3 {
            compatible = "shm_uio";
            reg = < 0x00 0x3ed20000 0x00 0x10000 >;
        };

        /* APU maps the RPU interactions using the IPI.7 channel mapped on the GIC vector(61)-32 = 29 = 0x1d */
        /* Interrupts are mapped on 0xff340000 and communication buffers on 0xff990a00 */
        ipi@ff340000 {
            compatible = "ipi_uio";
            reg = < 0x00 0xff340000 0x00 0x1000 >;
            interrupt-parent = < 0x04 >;
            interrupts = < 0x00 0x1d 0x04 >;
        };

        timer@ff110000 {
            compatible = "ttc_uio";
            reg = < 0x00 0xff110000 0x00 0x1000 >;
        };
    };

So, the device tree entry ipi@ff340000 maps onto the Linux UIO framework the interrupt registers of IPI channel 7, routed to GIC vector 61 according to the UG1185 reference note.

The timer@ff110000 entry maps onto the Linux UIO framework the register window of TTC 0 (Triple Timer Counter), which is used to take accurate measurements in the different scenarios.
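The numbers quoted in this section are related by simple arithmetic, which the following sketch spells out: the bit selected by IPI_MASK and the value written in the `interrupts` cell for GIC vector 61. The helper names are hypothetical; the offset of 32 is the start of the GIC shared peripheral interrupts, as the comment in the listing above also assumes.

```python
IPI_MASK = 0x01000000   # mask quoted for the R5 ISR
GIC_SPI_BASE = 32       # shared peripheral interrupts start at GIC ID 32


def mask_bit(mask):
    """Return the index of the single bit set in `mask`."""
    if mask == 0 or mask & (mask - 1):
        raise ValueError("mask must have exactly one bit set")
    return mask.bit_length() - 1


def dt_interrupt_cell(gic_id):
    """Return the SPI number written in the device tree for a GIC interrupt ID."""
    return gic_id - GIC_SPI_BASE


print(mask_bit(IPI_MASK))           # 24
print(hex(dt_interrupt_cell(61)))   # 0x1d
```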

A complete device tree ready to work with the U96 UltraScale and the metal demos is provided here. The Remote Processor 0 resource zynqmp_ipi1 has been modified from the original ipi channel7 to channel8 to allow standard operation with the git sources provided on the libmetal demos.

LibMetal reference examples

The R5 .elf image can be deployed using the Xilinx-SDK or downloaded from here

We have refactored the Linux and bare-metal applications with C++17 features to learn how to deploy an application on top of the libmetal API.

Echo Testing

This demo demonstrates the use of shared memory between the APU (Application Processing Unit, A53.0) and the RPU (Real-time Processing Unit, R5.0) available on the ZynQMP SoC by solving an echo problem.

The device tree entry shm@0 is used by shmem_demo.cpp.

The proposed protocol is the following:

- The APU opens the shared memory metal device and clears the memory areas related to the transaction control. Afterwards, it notifies the RPU firmware that a transaction is about to start.

- The APU writes a message pattern to the shared memory and increases the TX avail value in the control area to notify the RPU that a message is ready to be read.

- The APU polls the RX avail offset in the shared memory control area to see whether the RPU has echoed the message back into the shared memory.

- When the APU samples enough bytes, it reads the RX message from the shared memory and verifies that the response matches the pattern sent.

- The APU releases everything it requested from the metal layer to complete the transaction.

So, the shared memory structure is like this :

| Offset | Size | Content |
|--------|------|---------|
| 0x00 | 4 bytes | demo control/status activity |
| 0x04 | 4 bytes | number of APU to RPU buffers available to RPU |
| 0x08 | 4 bytes | number of APU to RPU buffers consumed by RPU |
| 0x0c | 4 bytes | number of RPU to APU buffers available to APU |
| 0x10 | 4 bytes | number of RPU to APU buffers consumed by APU |
| 0x14 | 1 KByte | APU to RPU buffer |
| 0x800 | 1 KByte | RPU to APU buffer |
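The layout above can be exercised without hardware by treating a bytearray as the shared memory window. The sketch below is a simplified model under stated assumptions: the counters are 32-bit little-endian words, the message length is passed out of band, `rpu_echo` merely stands in for the R5 firmware, and the message text is made up. It is not the code of shmem_demo.cpp.

```python
import struct

# Offsets taken from the shared memory layout described above.
TX_AVAIL, TX_USED, RX_AVAIL, RX_USED = 0x04, 0x08, 0x0C, 0x10
TX_BUF, RX_BUF, BUF_SIZE = 0x14, 0x800, 1024


def rd32(shm, off):
    """Read a 32-bit little-endian word at `off`."""
    return struct.unpack_from("<I", shm, off)[0]


def wr32(shm, off, value):
    """Write a 32-bit little-endian word at `off`."""
    struct.pack_into("<I", shm, off, value)


def apu_send(shm, msg):
    """APU side: copy the message and publish it through the TX avail counter."""
    shm[TX_BUF:TX_BUF + len(msg)] = msg
    wr32(shm, TX_AVAIL, rd32(shm, TX_AVAIL) + 1)


def rpu_echo(shm, length):
    """Stand-in for the R5 firmware: echo a pending TX buffer back."""
    if rd32(shm, TX_AVAIL) == rd32(shm, TX_USED):
        return False  # nothing pending
    shm[RX_BUF:RX_BUF + length] = shm[TX_BUF:TX_BUF + length]
    wr32(shm, TX_USED, rd32(shm, TX_USED) + 1)
    wr32(shm, RX_AVAIL, rd32(shm, RX_AVAIL) + 1)
    return True


def apu_receive(shm, length):
    """APU side: return the echoed message once RX avail moves ahead of RX used."""
    if rd32(shm, RX_AVAIL) == rd32(shm, RX_USED):
        return None
    data = bytes(shm[RX_BUF:RX_BUF + length])
    wr32(shm, RX_USED, rd32(shm, RX_USED) + 1)
    return data


shm = bytearray(RX_BUF + BUF_SIZE)  # zeroed, like the cleared control area
message = b"hypothetical test pattern"
apu_send(shm, message)
rpu_echo(shm, len(message))
print(apu_receive(shm, len(message)) == message)
```

In the real demo the APU polls the RX avail word in a loop; here the echo is called synchronously to keep the sketch deterministic.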

Atomics with the IPI support

The shmem_atomic_demo.cpp demo exercises the control capabilities of lightweight APU/RPU transactions:

- The APU opens the shared memory and IPI metal devices. A user-space ISR (Interrupt Service Routine) is registered for the IPI device.

- The APU kicks the IPI channel to notify the other end to start the demo.

- The APU atomically adds 1 to a value in the shared memory, 5000 times.

- The APU waits for the remote IPI kick to know when the RPU has finished its part of the demo.

- The result is verified. As both sides have added 1 a total of 5000 times each, the end result must be 5000*2.

- Every reserved metal resource is cleaned up.
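The verification logic of the atomic demo can be illustrated on a single machine. In this sketch two threads play the APU and RPU roles, a lock-protected integer stands in for the atomic word in shared memory, and a threading.Event stands in for the IPI kick; all of these are substitutions made for illustration, not the libmetal primitives.

```python
import threading

ITERATIONS = 5000


class SharedCounter:
    """A lock-protected integer standing in for the atomic word in shared memory."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def add(self, amount):
        with self._lock:
            self.value += amount


def run_demo():
    """Run both sides and return the final counter value."""
    counter = SharedCounter()
    start = threading.Event()  # plays the role of the initial IPI kick

    def rpu_side():
        start.wait()
        for _ in range(ITERATIONS):
            counter.add(1)

    rpu = threading.Thread(target=rpu_side)
    rpu.start()
    start.set()                   # "kick" the other side
    for _ in range(ITERATIONS):   # APU side
        counter.add(1)
    rpu.join()                    # stands in for waiting on the RPU's IPI kick
    return counter.value


print(run_demo() == 2 * ITERATIONS)
```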

Shared Memory Echo using the IPI support

The ipi_shmem_demo.c demo allows maximum concurrency in the APU/RPU transactions, using atomics and the IPI on shared memory chunks and buffers.

- Open the shared memory and IPI metal devices.

- Write message to the shared memory.

- Kick RPU with the IPI to notify there is a message written to the shared memory

- Wait until the remote has kicked the IPI to notify the remote has echoed back the message.

- Read the message from shared memory and try to verify it.

- Loop this protocol a fixed number of iterations

- Clean up every metal resource reserved
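The kick-and-wait handshake of this demo can be modelled with two events, one per direction. This is a sketch under assumptions: threads replace the two processors, threading.Event replaces the IPI kick, a 64-byte bytearray split in two halves replaces the shared memory buffers, and the round count is arbitrary.

```python
import threading

ROUNDS = 4  # hypothetical; the demo loops a fixed number of iterations


def run_echo(rounds=ROUNDS):
    """Return one boolean per round telling whether the echo matched."""
    shm = bytearray(64)
    kick_rpu = threading.Event()  # APU -> RPU notification
    kick_apu = threading.Event()  # RPU -> APU notification
    results = []

    def rpu_side():
        for _ in range(rounds):
            kick_rpu.wait()
            kick_rpu.clear()
            # Echo: copy the request area (first half) to the reply area.
            shm[32:64] = shm[0:32]
            kick_apu.set()

    rpu = threading.Thread(target=rpu_side)
    rpu.start()
    for i in range(rounds):
        message = f"round {i}".encode().ljust(32, b"\0")
        shm[0:32] = message       # write the message to shared memory
        kick_rpu.set()            # kick the RPU
        kick_apu.wait()           # wait for the echo notification
        kick_apu.clear()
        results.append(bytes(shm[32:64]) == message)
    rpu.join()
    return results


print(all(run_echo()))
```

Each side clears its event only after being woken, so a kick is never lost between two consecutive rounds.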

Latencies Measuring

The ipi and shared memory latencies are measured with the ipi_latency_demo.c and shmem_latency_demo.c modules

BareMetal/FreeRTOS support

- The current Xilinx BSP implements OpenAMP on top of the libmetal library.

- When compiling the BSP, the target processor must be defined using a specific -D compile option coupled to the OpenAMP package. For example, if the .elf targets the PU1, everything must be compiled with -DUSE_AMP=1.

- The reference applications provided by PetaLinux/OpenAMP for the ZynQ processors can be downloaded here for the A9 platform and here for the R5 one.

- The sources can also be easily rebuilt using either this Xilinx-SDK or the Vitis one.

- The echo-test has been taken as a case study. It has been refactored using a modern C++ approach with the C++17 standard; sources can be found here for the A9 processor and here for the R5 one.

OpenAMP reference examples

Echo

- This Linux test application creates a remote processor endpoint associated with the remote processor message published by the remote processor firmware. Loading the remote firmware .elf is done externally because it is not part of the application's intent.

- The application algorithm is isolated in the symbol endPointTesting. The dynamic structure _payload is sent to and retrieved from the remote processor to configure the transaction parameters:

        struct _payload {
            unsigned long id;
            unsigned long port_size;
            uint8_t data[];
        };

- For every interaction with the remote processor, port_size is incremented, the id field tags the interaction and the message bulk data is filled with the binary pattern 0b01011010 (0x5a). Different payload sizes thus help to measure the system bandwidth and throughput.

- The target memory is the DDR segment declared as reserved in the device tree.

- The transaction is verified by requesting the current state from the remote processor and checking that the incoming data matches the pattern sent.

The user terminal output of a successful execution looks like the following, where the payload has been limited to 32 bytes:

    root@popov:/lib/firmware# /opt/shared/bin/openAMP/echo_test.a9.662289b.x
    Tue Apr 7 21:49:05 2020
    payload.id( 0)( ack.sized as 9 vs 9 )( 1 )
    payload.id( 1)( ack.sized as 10 vs 10 )( 1 )
    payload.id( 2)( ack.sized as 11 vs 11 )( 1 )
    payload.id( 3)( ack.sized as 12 vs 12 )( 1 )
    payload.id( 4)( ack.sized as 13 vs 13 )( 1 )
    payload.id( 5)( ack.sized as 14 vs 14 )( 1 )
    payload.id( 6)( ack.sized as 15 vs 15 )( 1 )
    payload.id( 7)( ack.sized as 16 vs 16 )( 1 )
    payload.id( 8)( ack.sized as 17 vs 17 )( 1 )
    payload.id( 9)( ack.sized as 18 vs 18 )( 1 )
    payload.id( 10)( ack.sized as 19 vs 19 )( 1 )
    payload.id( 11)( ack.sized as 20 vs 20 )( 1 )
    payload.id( 12)( ack.sized as 21 vs 21 )( 1 )
    payload.id( 13)( ack.sized as 22 vs 22 )( 1 )
    payload.id( 14)( ack.sized as 23 vs 23 )( 1 )
    payload.id( 15)( ack.sized as 24 vs 24 )( 1 )
    payload.id( 16)( ack.sized as 25 vs 25 )( 1 )
    payload.id( 17)( ack.sized as 26 vs 26 )( 1 )
    payload.id( 18)( ack.sized as 27 vs 27 )( 1 )
    payload.id( 19)( ack.sized as 28 vs 28 )( 1 )
    payload.id( 20)( ack.sized as 29 vs 29 )( 1 )
    payload.id( 21)( ack.sized as 30 vs 30 )( 1 )
    payload.id( 22)( ack.sized as 31 vs 31 )( 1 )
    payload.id( 23)( ack.sized as 32 vs 32 )( 1 )
    bool endPointTesting(int, int)Round 0 err_count(0)
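The frame sizes in the output above (9 bytes for id 0 up to 32 bytes for id 23) are consistent with an 8-byte header followed by a growing data area. The sketch below reproduces that arithmetic under two assumptions that the source does not state explicitly: `unsigned long` is 4 bytes on the 32-bit ARMv7 target, and port_size holds the number of data bytes. The helper names are hypothetical.

```python
import struct

HEADER = struct.Struct("<II")  # id, port_size as two 32-bit words (ARMv7 assumption)
PATTERN = 0x5A


def build_payload(ident, data_len):
    """Pack a header followed by `data_len` bytes of the 0x5a pattern."""
    return HEADER.pack(ident, data_len) + bytes([PATTERN]) * data_len


def verify_echo(sent, received):
    """Check the size and the pattern of an echoed payload."""
    if len(received) != len(sent):
        return False
    _, data_len = HEADER.unpack_from(received)
    pattern_ok = received[HEADER.size:] == bytes([PATTERN]) * data_len
    return pattern_ok and received == sent


for ident in range(3):
    frame = build_payload(ident, ident + 1)
    print(f"payload.id({ident:3d}) size {len(frame)}")
```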

Matrix

Proxy
